Definition & Ethos

Vibe coding is AI-assisted software development where you express intent in natural language and direct the AI to draft code. You describe outcomes, constraints, and user expectations; the model proposes the implementation, and you steer the edits.

Popularized by Andrej Karpathy in early 2025, it shifts the role from authoring every line to directing outcomes. You focus on behavior, constraints, and user experience while the AI handles the first draft. The workflow feels closer to creative direction: ask, review, refine, and tighten.

It is not no-code. You still own architecture, boundaries, and correctness. The code is a proposal until it passes your vibe check and objective verification. You, not the model, are accountable for what ships. Expect to edit, test, and sometimes reject whole approaches.

- Intent: You define the goal and the acceptance criteria.

- Control: You decide scope, files, and what must stay untouched.

- Proof: You validate through diffs, tests, and real behavior.

The Shift

- Syntax becomes intent.

- Manual edits become diff review.

- Compile errors become behavior bugs.

- Solo building becomes dialog with the model.

Director mindset

You define intent, constraints, and acceptance. The AI drafts; you approve.

Small scopes

Short prompts and tight diffs keep output clean and controllable.

Verify by default

Run it, read the diff, and validate with tests before moving on.

State discipline

Commit often, keep logs, and preserve rollback options.

When to Vibe vs When to Code

Use vibe coding when speed, experimentation, and rapid feedback matter most. It shines when you can tolerate iteration and rework while exploring a product surface or validating assumptions. The cost of a wrong turn is low, and the learning value is high.

Shift to traditional or hybrid workflows when reliability, security, or long-term maintainability become the priority. The higher the blast radius, the more you should design before you generate. Think of vibe coding as a generator, not the final authority. If requirements are unclear, slow down and clarify before you prompt.

- Risk tolerance: Can you safely roll back if the change is wrong?

- Scope clarity: Do you know the boundaries and acceptance checks?

- Lifecycle: Is this a short-lived experiment or a long-term system?

Great Fits

- MVPs, demos, and investor prototypes

- Internal tools and automation scripts

- UI iterations and content-heavy pages

- Data cleanup or one-off migrations with review

- Documentation scaffolding and onboarding guides

- Refactors with clear tests and boundaries

Use Traditional or Hybrid

- Safety-critical or regulated systems without review

- Performance-critical algorithms and low-level optimizations

- Large-scale architecture decisions without human design

- Security-heavy auth or cryptography without experts

- Ambiguous requirements or unresolved product questions

- Long-lived systems with no tests or documentation

The Vibe Loop

This loop is the operational backbone of vibe coding: a tight cycle of intent, generation, and verification. Treat each pass as a hypothesis. If the model proposes a change, you prove or reject it quickly. The loop is not a brainstorm; it is a narrowing funnel.

Keep cycles short and scope frozen. When the model starts expanding the spec, reset the prompt or slice the work smaller. Exit the loop only when behavior, UX, and test signals converge, not just when the output looks right. Track what changed each pass to prevent drift.

- Exit signals: readable diff, tests pass, UX matches, edge cases sanity-checked.

Frame the outcome

Define the goal, constraints, and the user-facing result.

Scope the change

Identify files, boundaries, and what must stay untouched.

Generate

Let the model draft or modify the code in one focused pass.

Vibe check

Run the app and validate behavior, layout, and edge cases.

Objective checks

Review diff, run tests, validate performance and security.

Integrate

Commit, document, and set the next iteration target.
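The loop above can be sketched as a small driver that generates a draft, runs every named check, and stops at the first clean pass. This is a minimal sketch under stated assumptions: `run_vibe_loop`, `PassResult`, and the `generate` callable are hypothetical names, and `generate` stands in for whatever model call or tool you actually use.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class PassResult:
    """Outcome of one pass: which named checks failed."""
    iteration: int
    failed_checks: List[str] = field(default_factory=list)

    @property
    def converged(self) -> bool:
        # A pass converges only when no check failed.
        return not self.failed_checks


def run_vibe_loop(
    generate: Callable[[int], str],
    checks: Dict[str, Callable[[str], bool]],
    max_passes: int = 3,
) -> List[PassResult]:
    """Generate a draft, run every check, and stop at the first clean pass."""
    history: List[PassResult] = []
    for iteration in range(1, max_passes + 1):
        draft = generate(iteration)  # hypothetical stand-in for a model call
        failed = [name for name, check in checks.items() if not check(draft)]
        history.append(PassResult(iteration, failed))
        if not failed:
            break  # every exit signal agreed, so leave the loop
    return history
```

The returned history doubles as the "track what changed each pass" record. The `max_passes` cap is an assumption that keeps a looping session from running indefinitely; if the budget runs out without convergence, that is a cue to shrink the scope rather than keep prompting.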

Prompting Playbook

High-quality prompts are compact specs: they define the outcome, draw boundaries, and include a success checklist. When you encode constraints (stack, style, non-goals), the model stops guessing and starts aligning. The prompt is part of your engineering system, so treat it like a design doc that can be reviewed.

Provide just enough context to anchor the model in reality: key files, naming conventions, and what must not change. When a change is risky, ask for a plan first, then approve a smaller, explicit diff. You are steering intent, not filling in prose. If you cannot measure success, make the task smaller.

- Include: goal, constraints, non-goals, inputs/outputs, acceptance checks.

Prompt DNA

- Goal: Describe the user outcome and success criteria.

- Constraints: List stack, libraries, patterns, and non-negotiables.

- Context: Reference relevant files, APIs, or data shapes.

- Inputs/Outputs: Define data inputs, outputs, and expected behaviors.

- Acceptance: Bullet the checks that must pass before you merge.

- Non-goals: Call out what not to change or add.

Prompt Template

Role: You are maintaining this repo.
Goal: <what the user should be able to do>
Constraints: <stack, libraries, style rules>
Context: <files, endpoints, data models>
Files: <what can change / what must not>
Acceptance: <tests, UI checks, edge cases>
Non-goals: <explicitly out of scope>
Deliverable: <patches + brief summary>
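If you fill this template often, it may help to treat it as data so that an empty field fails loudly instead of producing a vague prompt. The following is one possible sketch; `PromptSpec` and its field names are hypothetical and simply mirror the template's labels.

```python
from dataclasses import dataclass, fields


@dataclass
class PromptSpec:
    """One field per slot in the prompt template."""
    goal: str
    constraints: str
    context: str
    files: str
    acceptance: str
    non_goals: str
    deliverable: str = "patches + brief summary"
    role: str = "You are maintaining this repo."

    def render(self) -> str:
        # Refuse to render a prompt with blank slots: a missing constraint
        # or acceptance check is where the model starts guessing.
        empty = [f.name for f in fields(self) if not getattr(self, f.name).strip()]
        if empty:
            raise ValueError(f"empty prompt fields: {', '.join(empty)}")
        lines = [
            ("Role", self.role),
            ("Goal", self.goal),
            ("Constraints", self.constraints),
            ("Context", self.context),
            ("Files", self.files),
            ("Acceptance", self.acceptance),
            ("Non-goals", self.non_goals),
            ("Deliverable", self.deliverable),
        ]
        return "\n".join(f"{label}: {value}" for label, value in lines)
```

Calling `render()` on a spec returns the eight labeled lines in template order, ready to paste or to store in a prompt log.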

Ask for a plan before code

Have the model outline the approach and files touched.

One feature per prompt

Keep scope tight to prevent accidental regressions.

Diff-first requests

Ask for patches or small diffs, not whole file rewrites.

Provide sample data

Realistic inputs produce more accurate logic and UI.

Force acceptance criteria

Require explicit checks for success and failure states.

Ask for risks

Have the model list potential regressions or trade-offs.
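The diff-first habit can be checked mechanically. The sketch below parses the tab-separated output of `git diff --numstat` (added lines, deleted lines, path) and reports when a change exceeds a budget. The function names and the default limits of 5 files and 200 lines are illustrative assumptions, not recommended values.

```python
from typing import List, Tuple


def parse_numstat(numstat: str) -> List[Tuple[str, int]]:
    """Return (path, changed_lines) per file from `git diff --numstat` text."""
    result = []
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        added, deleted, path = parts
        # Binary files report "-" for both counts; treat them as 0 lines.
        changed = sum(int(n) for n in (added, deleted) if n.isdigit())
        result.append((path, changed))
    return result


def oversized(numstat: str, max_files: int = 5, max_lines: int = 200) -> List[str]:
    """Return reasons the diff exceeds the budget (an empty list means it fits)."""
    files = parse_numstat(numstat)
    total = sum(changed for _, changed in files)
    reasons = []
    if len(files) > max_files:
        reasons.append(f"{len(files)} files touched (limit {max_files})")
    if total > max_lines:
        reasons.append(f"{total} lines changed (limit {max_lines})")
    return reasons
```

A non-empty result is a signal to ask the model for a smaller patch before reviewing, rather than trying to read a whole-file rewrite.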

Quality Gates

A vibe check catches obvious issues, but it cannot prove correctness. Quality gates convert subjective confidence into measurable validation. Think of them as deliberate friction: each gate forces a pause before you scale the change.

Order matters. Read the diff before running tests so you know exactly what changed. Then run tests, manually check the UX, and probe edge cases. If a gate fails, fix the root cause and rerun the gate instead of stacking more changes. Gates should scale with risk, not with ego.

- Minimum bar: diff review, local run, one edge-case check.
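The automated part of this ordering can be sketched as a runner that executes gates in sequence and stops at the first failure, which nudges you to fix that gate before stacking more changes. `run_gates` and `EXAMPLE_GATES` are hypothetical names, and the example assumes a project that uses Git and pytest. Reading the diff and checking the UX remain manual steps.

```python
import subprocess
import sys
from typing import Optional, Sequence, Tuple

Gate = Tuple[str, Sequence[str]]  # (gate name, command to run)

# Assumed example: summarize the diff first, then run the test suite.
EXAMPLE_GATES: Sequence[Gate] = [
    ("diff summary", ["git", "diff", "--stat"]),
    ("tests", [sys.executable, "-m", "pytest", "-q"]),
]


def run_gates(gates: Sequence[Gate]) -> Optional[str]:
    """Run gates in order; return the first failing gate's name, or None."""
    for name, command in gates:
        completed = subprocess.run(command, capture_output=True, text=True)
        print(f"[{name}] exit code {completed.returncode}")
        if completed.stdout:
            print(completed.stdout.rstrip())
        if completed.returncode != 0:
            return name  # stop here: later gates are not run
    return None
```

Because the runner stops early, a failing test gate never gets buried under output from later gates.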

Vibe check

- Core flow works end-to-end

- UI spacing, copy, and states feel right

- No console errors or broken links

- Error states are clear and helpful

Objective checks

- Read the diff for unexpected changes

- Run relevant tests and commands

- Validate performance-sensitive paths

- Confirm dependencies are real and needed

Release ready

- Docs and handoff notes updated

- Rollback plan or previous commit ready

- Monitoring or logging added if needed

- Decision on follow-up tasks recorded

Debugging & Recovery

Debugging with AI works best when you force clarity. Always reproduce the bug, isolate the failing file or function, and ask for a targeted fix. The model is strongest when the problem surface is small and the evidence is concrete.

Large rewrites feel productive but often hide regressions. Ask for a minimal patch, apply it, and rerun the repro. If you are stuck, roll back and reframe the prompt with new evidence instead of piling on guesses. Anchor the fix to a failing test or log snippet.

- Bring: repro steps, expected vs actual, error logs, environment details.

Triage Ladder

- Reproduce with a minimal case.

- Read the diff and isolate the regression.

- Add lightweight logging or assertions.

- Ask for a targeted fix with constraints.

- If it loops, revert and reframe the prompt.

Common Failure Modes

- Hallucinated dependencies or APIs.

- Model drift from the original intent.

- Large rewrites that hide regressions.

- Overprompting that creates tangled logic.

- Silent breaks in edge cases or error states.
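Hallucinated dependencies are one failure mode that can be partly caught before running anything. As a rough sketch for Python output only, the hypothetical helper below collects the top-level modules a generated file imports and reports those that cannot be found in the current environment.

```python
import ast
import importlib.util
from typing import List


def missing_imports(source: str) -> List[str]:
    """Return top-level modules imported by `source` that are not importable here."""
    tree = ast.parse(source)
    modules = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # level == 0 means an absolute import; relative imports are skipped.
            modules.add(node.module.split(".")[0])
    return sorted(m for m in modules if importlib.util.find_spec(m) is None)
```

A reported name may simply be a real package that is not installed yet, and a module that resolves can still be the wrong one, so treat the result as a prompt to check the package index and the project's dependency list, not as proof either way.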

Workflow Hygiene

Workflow hygiene is how you keep velocity without chaos. These habits preserve context, protect against regressions, and make it easy to hand off work to another human or another model. Hygiene is the difference between “fast” and “fragile.”

Treat prompts as artifacts: store them, name them, and connect them to commits. Keep diffs small and commit often so you can unwind mistakes without losing hours. If you only adopt a few habits, start with commits and prompt logs. Timebox sessions to avoid context sprawl.

- High ROI: prompt log, tight branch scope, frequent rollback points.

Commit like checkpoints

Save every stable milestone so you can undo quickly.

Keep a prompt log

Record prompts and outcomes for traceability.

Track TODOs explicitly

Use a task list to avoid half-done work.

Reset context when it drifts

Start a new session with a clean summary if answers degrade.

Keep diffs small

Large diffs hide bugs and slow down review.

Document decisions

Write down why you chose an approach or trade-off.
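A prompt log that connects prompts to commits can be as simple as an append-only JSON Lines file. The sketch below is one possible shape; `log_prompt`, `read_log`, and the field names are hypothetical. The commit identifier is passed in by the caller, for example from the output of `git rev-parse HEAD`.

```python
import json
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict, List


def log_prompt(log_path: str, prompt: str, outcome: str, commit: str) -> Dict[str, str]:
    """Append one prompt record as a JSON line and return it."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "commit": commit,    # commit the prompt's result landed in
        "prompt": prompt,
        "outcome": outcome,  # e.g. "accepted", "reverted", "needs follow-up"
    }
    with Path(log_path).open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry) + "\n")
    return entry


def read_log(log_path: str) -> List[Dict[str, str]]:
    """Load every record from the log in the order it was written."""
    with Path(log_path).open(encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]
```

Appending rather than rewriting keeps earlier entries intact, which matters when the log is used to trace a regression back to the prompt that introduced it.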

Handoff Format

Context: <short summary of goal>
Changes: <files touched + why>
Checks: <tests or commands run>
Open Issues: <what is left>
Next Prompt: <recommended next instruction>

The Vibe Stack

Tooling should reflect the stage of work. Use fast editors and browser agents to explore, then bring in CLI agents and repo search to verify, refactor, and harden. Keep the model close to real files and real constraints to avoid hallucinated gaps.

The best stack minimizes translation between idea, code, and validation. If a tool cannot read the repo or run the code, it will drift. Bias toward tools that can touch the same files you will ship. Avoid tools that only summarize without file access.

- Pick by need: UI feel, repo-wide changes, or verification speed.

AI IDEs

Deep repo awareness and fast generation inside your editor.

- Cursor

- Windsurf

- VS Code with Claude/Copilot

CLI agents

Run tasks in terminal with file access and automation.

- Claude Code

- Custom scripts + prompts

Browser builders

Rapid UI and full-stack prototypes in the browser.

- Bolt

- Lovable

- Replit Agent

Model strategy

Use fast models for drafts, strong models for reviews.

- Draft quickly

- Review slowly

- Validate with tests

Repo intelligence

Use fast search and tree views to ground prompts.

- rg (ripgrep) for search

- tree for structure

- clear file paths

MCP servers

Give models controlled access to tools and data.

- GitHub or Git

- Filesystem

- Databases

- Docs

Prompt Recipes

Recipes are repeatable prompt structures that reduce variance and improve reliability. They let you express goals consistently while keeping scope tight and reviewable. If your team uses the same recipe, review becomes faster and more predictable.

Every recipe should be a template with slots for goal, constraints, files, and acceptance checks. Never paste a recipe blindly; adapt it to the repo rules and the specific change you want. The recipe is a scaffold, not a straitjacket. Retire recipes that drift or cause rework.

- Structure: context, task, constraints, outputs, verification.

Feature implementation

Constrain the stack and define acceptance criteria up front.

Goal: Add a compact pricing table section.
Constraints: Use Tailwind utilities, no new dependencies.
Files: Only edit app/pages/vibe-coding-guide.vue.
Acceptance: Mobile and desktop layout look balanced, no console errors.

Bug fix with reproduction

Give the exact repro steps and expected output.

Bug: Button labels overlap on mobile.
Repro: iPhone width 390px, open /vibe-coding-guide.
Expected: Labels wrap without overlap.
Fix: Adjust layout classes only, no new components.

Refactor safely

Request small diffs and preserve behavior.

Task: Reduce duplication in the checklist section.
Constraints: Keep markup structure and visual output identical.
Output: Provide a minimal patch with explanation.

Write tests first

Define tests before implementation changes.

Task: Add tests for the new workflow steps.
Provide: Test plan + test cases before any code changes.

UX polish pass

Make the model focus on micro layout and copy.

Review the guide for layout balance and concise copy.
List: 5 improvements with before/after snippets.

Security review

Ask for a focused audit and risk list.

Review the changes for security or data exposure risks.
Return: A short risk list and suggested fixes.

Team Playbook

AI accelerates output, but teams still need accountability. Assign a clear owner for requirements, a builder for the prompt loop, and a reviewer who validates behavior and risk. This separation prevents model drift from turning into production debt.

Shared standards matter. Use a consistent prompt format and a consistent review checklist so everyone evaluates with the same bar. Rotate reviewers to reduce blind spots and keep quality expectations aligned across the team. Document decisions so constraints are visible later.

- Roles: Owner sets scope, Builder runs loop, Reviewer verifies.

Product Lead

- Owns requirements and acceptance criteria.

- Keeps scope aligned to real user outcomes.

- Signs off on final behavior.

Builder

- Directs the model and integrates changes.

- Runs the vibe loop and keeps diffs small.

- Documents decisions and open issues.

Reviewer

- Reads diffs, tests outcomes, and spots risks.

- Validates performance and security impacts.

- Approves or rejects with clear feedback.

Safety & Trust

AI is a powerful collaborator, not a trusted authority. You are responsible for security, privacy, and compliance, especially around data and dependencies. Treat model output like untrusted input until it is verified.

Classify data before you paste it. Use least-privilege tokens, audit what changes, and keep a rollback path. If the model touches dependencies, pin versions and review changelogs. If a tool reaches production data, add explicit red-team checks.

- Guardrails: no secrets in prompts, dependency pinning, change logs, backups.

- Treat AI output as untrusted until verified.

- Never paste secrets or production data into prompts.

- Verify licensing and ownership of generated assets.

- Lock down tool permissions to the smallest scope.

- Audit dependencies and remove unused packages.

- Maintain a rollback path for every release.
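The "no secrets in prompts" guardrail can be backed by a quick scan before anything is pasted. The sketch below is a heuristic only: `find_secrets` and the three patterns are illustrative assumptions that will miss many secret formats and may flag harmless text, so it supplements data classification rather than replacing it.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real secret formats vary widely.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "inline credential": re.compile(
        r"(?i)\b(api[_-]?key|secret|token|password)\b\s*[:=]\s*\S+"
    ),
}


def find_secrets(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, pattern_name) for each line that looks like a secret."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((number, name))
    return hits
```

An empty result does not mean the text is safe to share; it only means none of these particular patterns matched.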

Vibe Coding Checklist

This checklist is the release brake at the end of every iteration. It ensures you have validated scope, reviewed changes, and captured follow-ups before you move on. Momentum is great, but it should never skip verification.

Use it even when the change feels tiny; most regressions come from “small” edits. Check the diff, run a test, sanity-check the UX, and record what is next. A short pause here saves hours later. After merge, capture follow-ups so the next loop starts clean.

- Before done: diff review, tests, UX sanity, docs, follow-ups.

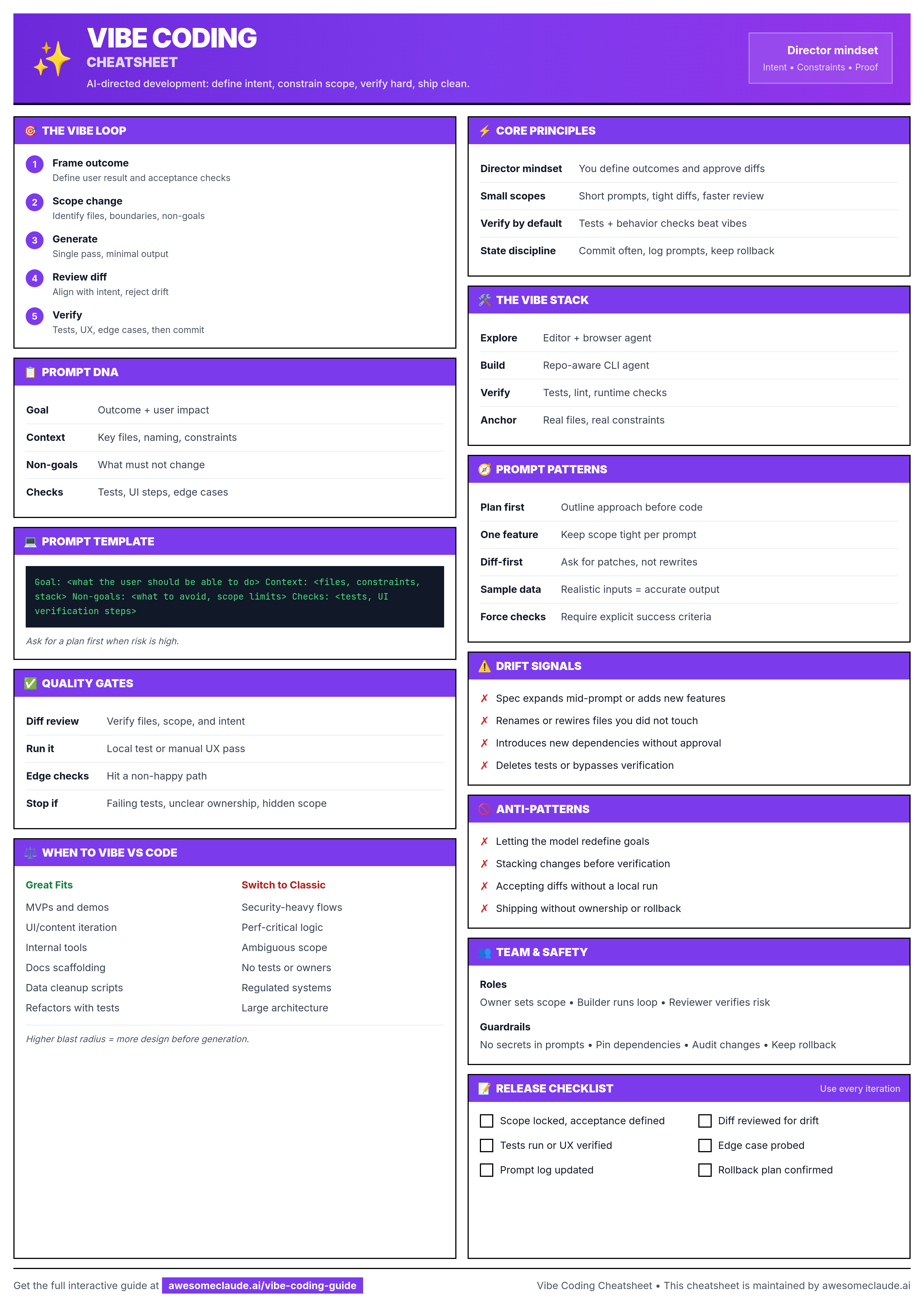


Printable Vibe Coding Cheatsheet

One-page reference with the core vibe loop, prompting DNA, quality gates, and release checklist. Designed for quick scans during builds.